Its large, active community lets you share knowledge and connect with peers easily. You also get an array of customization options.

Airflow scheduler software#

It was developed to work with the standard architectures that are integrated into most software development environments. Apache Airflow runs extremely well in cloud environments, so you gain a variety of options. It is extremely scalable and can be deployed on a single server or scaled up to massive deployments with many nodes.

Airflow scheduler download#

Airflow is an open-source platform, so you can download it and begin using it immediately, either individually or along with your team.

There are a variety of reasons to use Apache Airflow, as mentioned above.

Airflow scheduler professional#

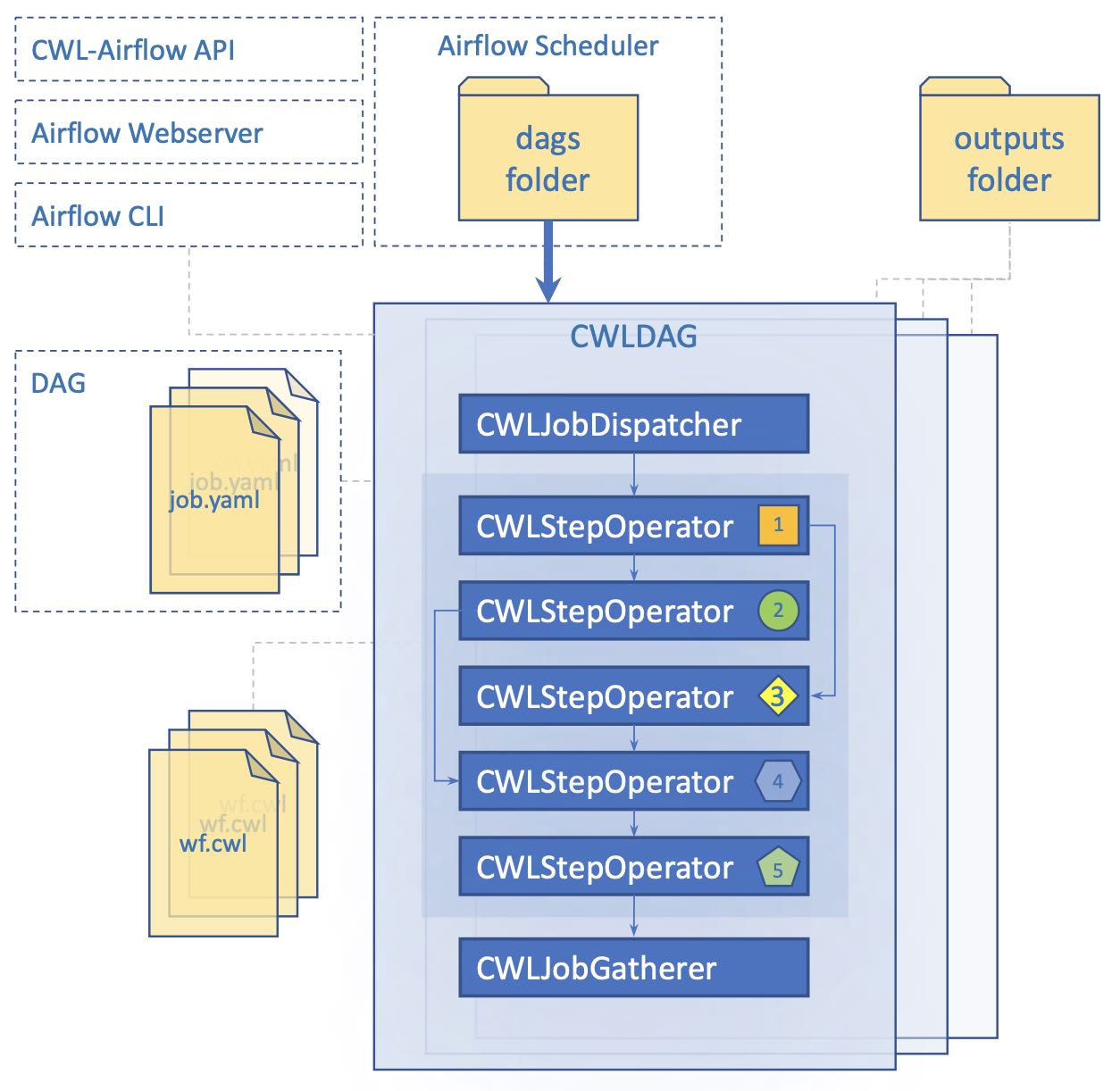

If you want to enrich your career and become a professional in Apache Kafka, then enroll on " MindMajix's Apache Kafka Training" - This course will help you to achieve excellence in this domain. With this platform, you can effortlessly run thousands of varying tasks each day thereby, streamlining the entire workflow management. Also, Airflow is a code-first platform as well that is designed with the notion that data pipelines can be best expressed as codes.Īpache Airflow was built to be expandable with plugins that enable interaction with a variety of common external systems along with other platforms to make one that is solely for you. In simple words, workflow is a sequence of steps that you take to accomplish a certain objective. However, it has now grown to be a powerful data pipeline platform.Īirflow can be described as a platform that helps define, monitoring and execute workflows. Initially, it was designed to handle issues that correspond with long-term tasks and robust scripts. It is mainly designed to orchestrate and handle complex pipelines of data. Table of Content- Apache AirFlow TutorialĪpache Airflow is one significant scheduler for programmatically scheduling, authoring, and monitoring the workflows in an organization. So, if you are looking forward to learning more about it, find out everything in this Apache Airflow tutorial. It simplifies the workflow of tasks with its well-equipped user interface. Written in Python, Apache Airflow offers the utmost flexibility and robustness. Since that time, it has turned to be one of the most popular workflow management platforms within the domain of data engineering. It commenced as an open-source project in 2014 to help companies and organizations handle their batch data pipelines. The lack of an approval process leads us to a 4 hours outage and 2 teams unable to work.If you work closely in Big Data, you are most likely to have heard of Apache Airflow. 
The change management process exists alone without an approval process to support it. This issue showed us that the development environment is a no man’s land. One week later and we don’t have any issues reported.

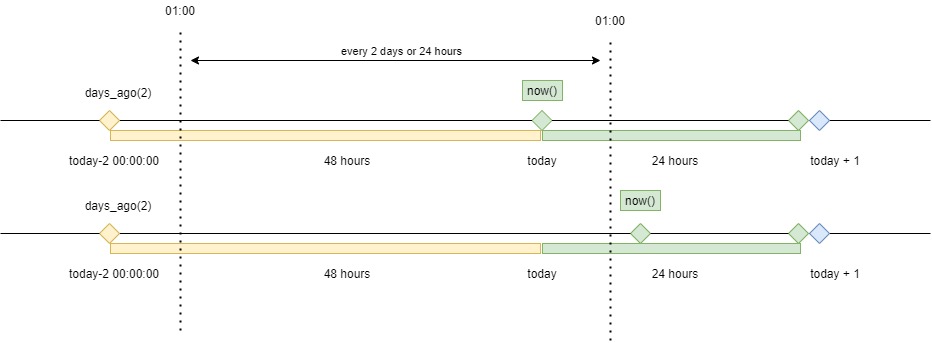

The environment was released to the developers team to check everything is alright. I then changed the Apache Airflow configuration file and set the parameter catchup_by_default to true again. For my surprise, the same parameter was changed one month ago. To confirm the case I checked with change management if we had some change in this environment. This parameter means for Apache Airflow to ignore pass execution time and start the schedule now. In summary, it seems this situation happened when the parameter catchup_by_default is set to False in airflow.cfg file. I then searched for the message in Apache Airflow Git and found a very similar bug: AIRFLOW-1156 BugFix: Unpausing a DAG with catchup=False creates an extra DAG run. I also checked on the airflow.cfg file, I checked the database connection parameter, task memory, and max_paralelism. I checked the DAGs logs from the last hours and there were no errors in the logs. But in this case, it is different because CPU usage was 2%, memory usage was 50%, no swap, no disk at 100% usage. In general, we see this message when the environment doesn’t have resources available to execute a DAG. The DAGs list may not update, and new tasks will not be scheduled. Last heartbeat was received 14 seconds ago. The scheduler does not appear to be running. I noticed every time the error happens, the Apache Airflow Console shows a message like this: Well, after opening some tasks to check Apache Airflow test environment for some investigation, I decided to check Apache Airflow configuration files to try to found something wrong to cause this error.
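To make the effect of the catchup_by_default setting discussed above more concrete, here is a minimal sketch in plain Python. It is a simplified model and not Airflow's scheduler code: the helper names `read_catchup_default` and `runs_to_schedule`, the sample config text, and the dates are all hypothetical and exist only for illustration. It assumes the option sits in the `[scheduler]` section of airflow.cfg and that a missing option is treated as True. With catch-up enabled, every interval missed since the start date gets a run; with it disabled, only the most recent completed interval does.

```python
import configparser
from datetime import datetime, timedelta
from typing import List

# Hypothetical excerpt of airflow.cfg; a real file contains many more options.
SAMPLE_CFG = """
[scheduler]
catchup_by_default = False
"""


def read_catchup_default(cfg_text: str) -> bool:
    """Return the catchup_by_default flag from airflow.cfg-style text."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    # Assumption: treat a missing section or option as True.
    return parser.getboolean("scheduler", "catchup_by_default", fallback=True)


def runs_to_schedule(start: datetime, interval: timedelta,
                     now: datetime, catchup: bool) -> List[datetime]:
    """List the interval start times a scheduler would create runs for.

    Simplified model: a run becomes due once its interval has fully elapsed.
    """
    due = []
    current = start
    while current + interval <= now:
        due.append(current)
        current += interval
    # Without catch-up, only the most recent completed interval is run.
    return due if catchup else due[-1:]


start = datetime(2024, 1, 1)
now = datetime(2024, 1, 5, 12)
daily = timedelta(days=1)

print(runs_to_schedule(start, daily, now, catchup=True))
print(runs_to_schedule(start, daily, now, read_catchup_default(SAMPLE_CFG)))
```

In this toy model the first call lists four daily runs (1 to 4 January) and the second lists only the run for 4 January, which is why flipping the flag on a shared environment can change scheduling behavior for every DAG that does not set its own catch-up value.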
